Introducing new Ultra-Realistic AI Voices from PlayHT!

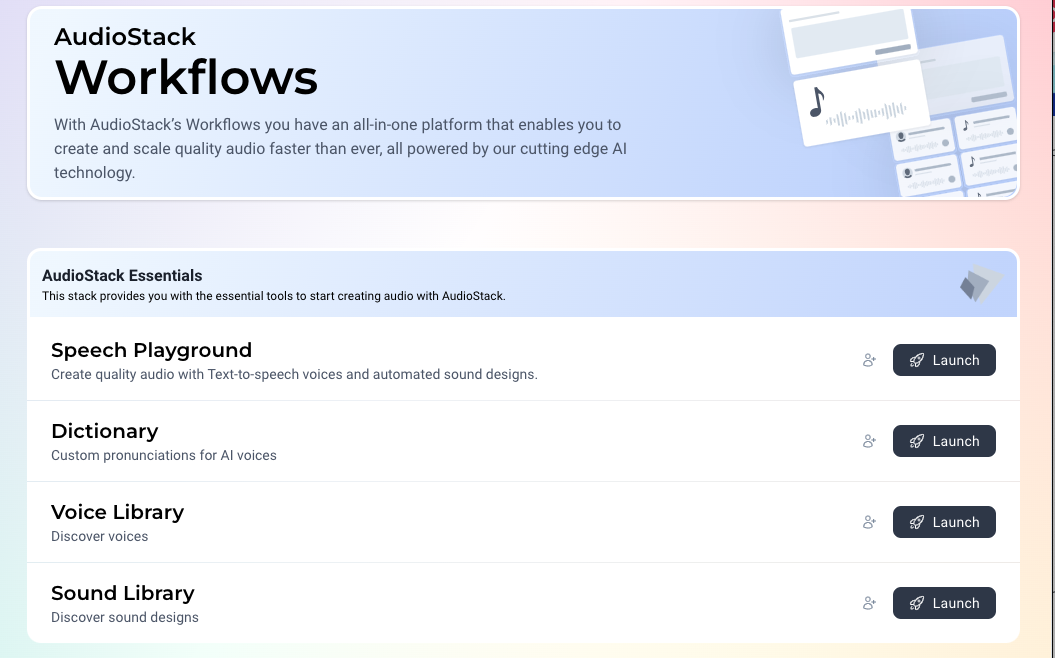

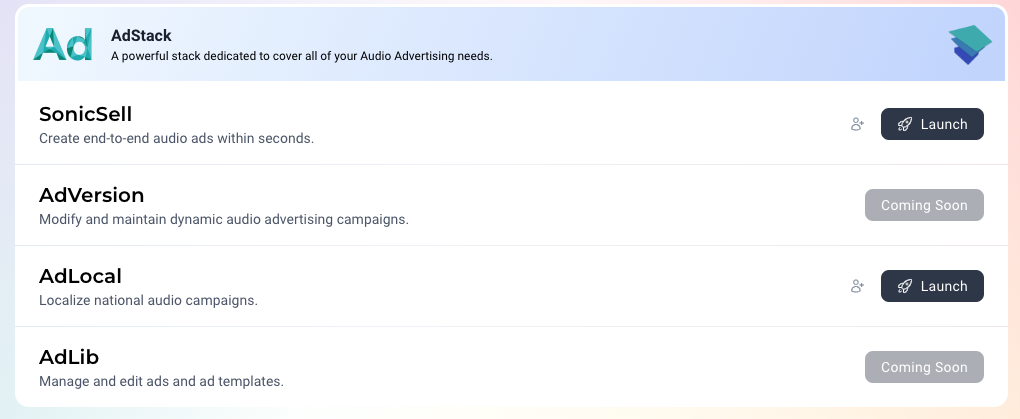

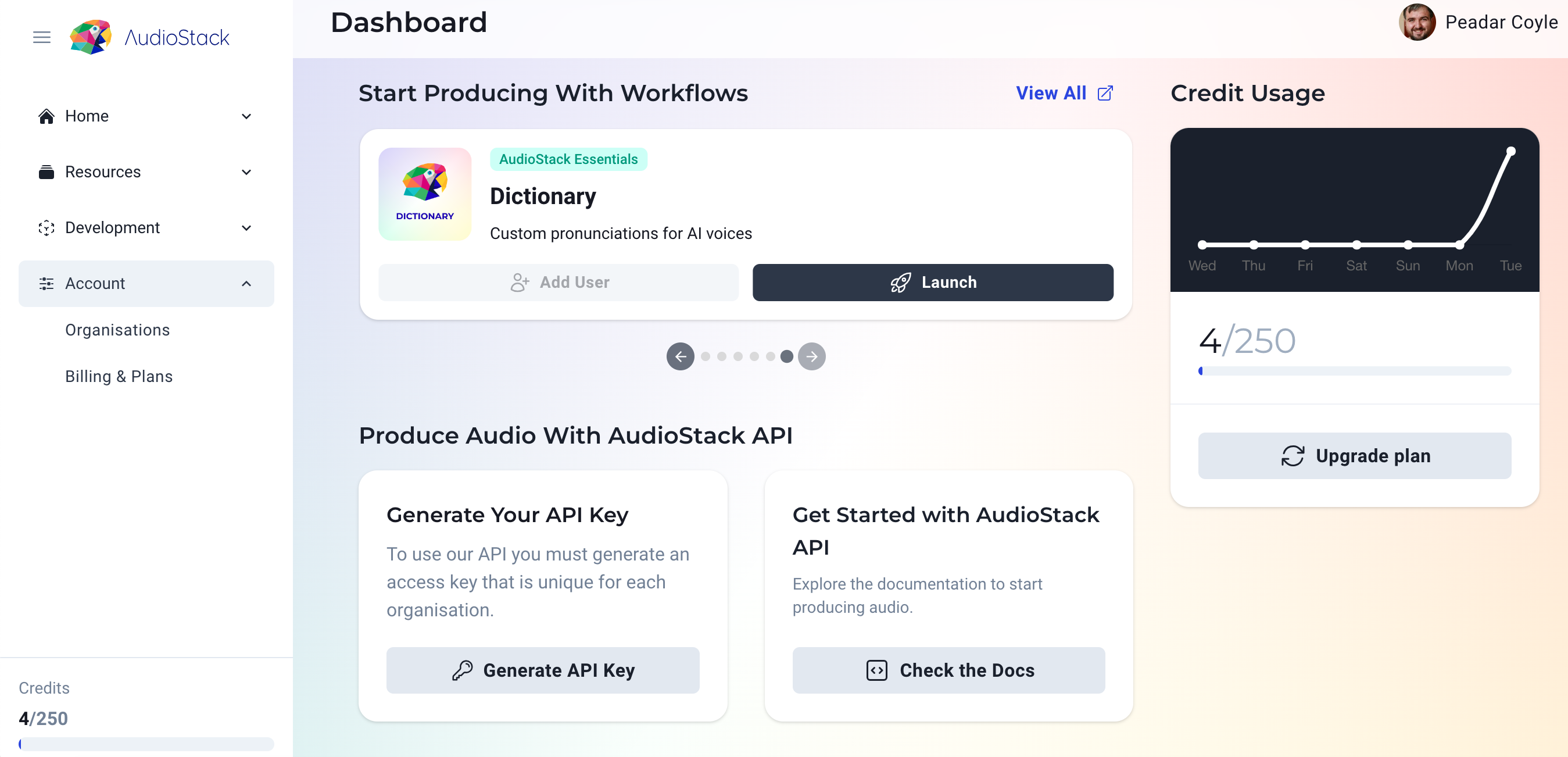

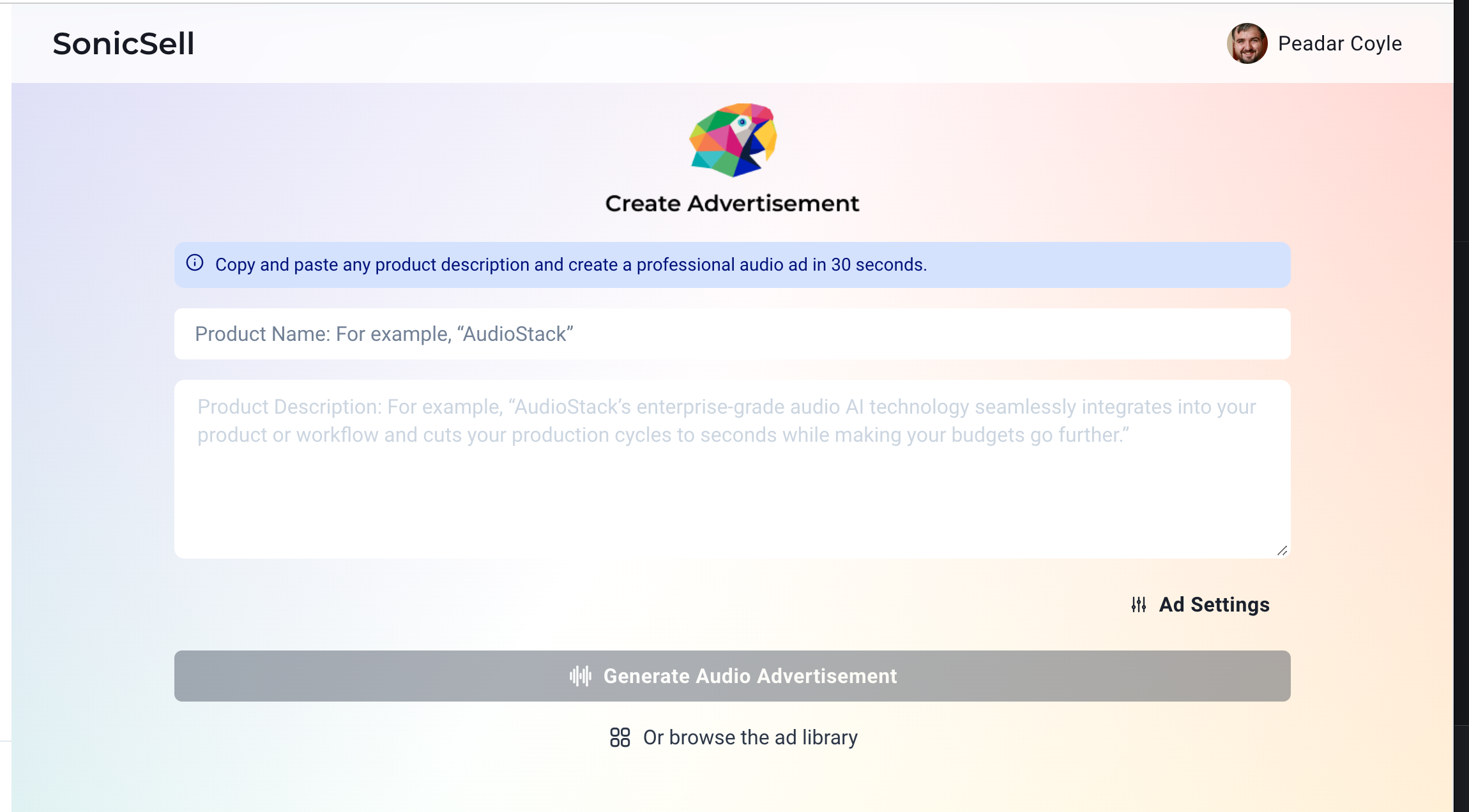

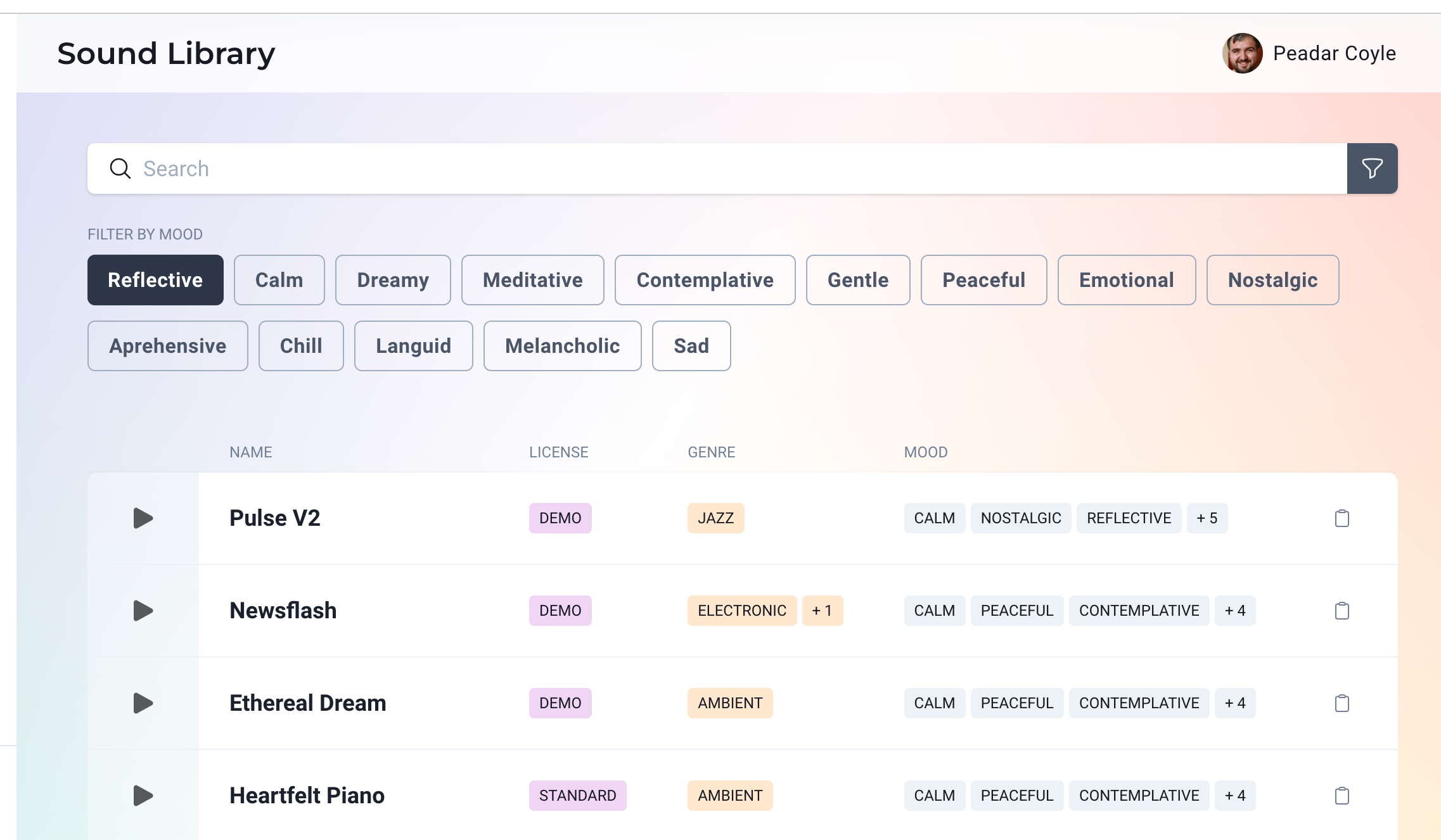

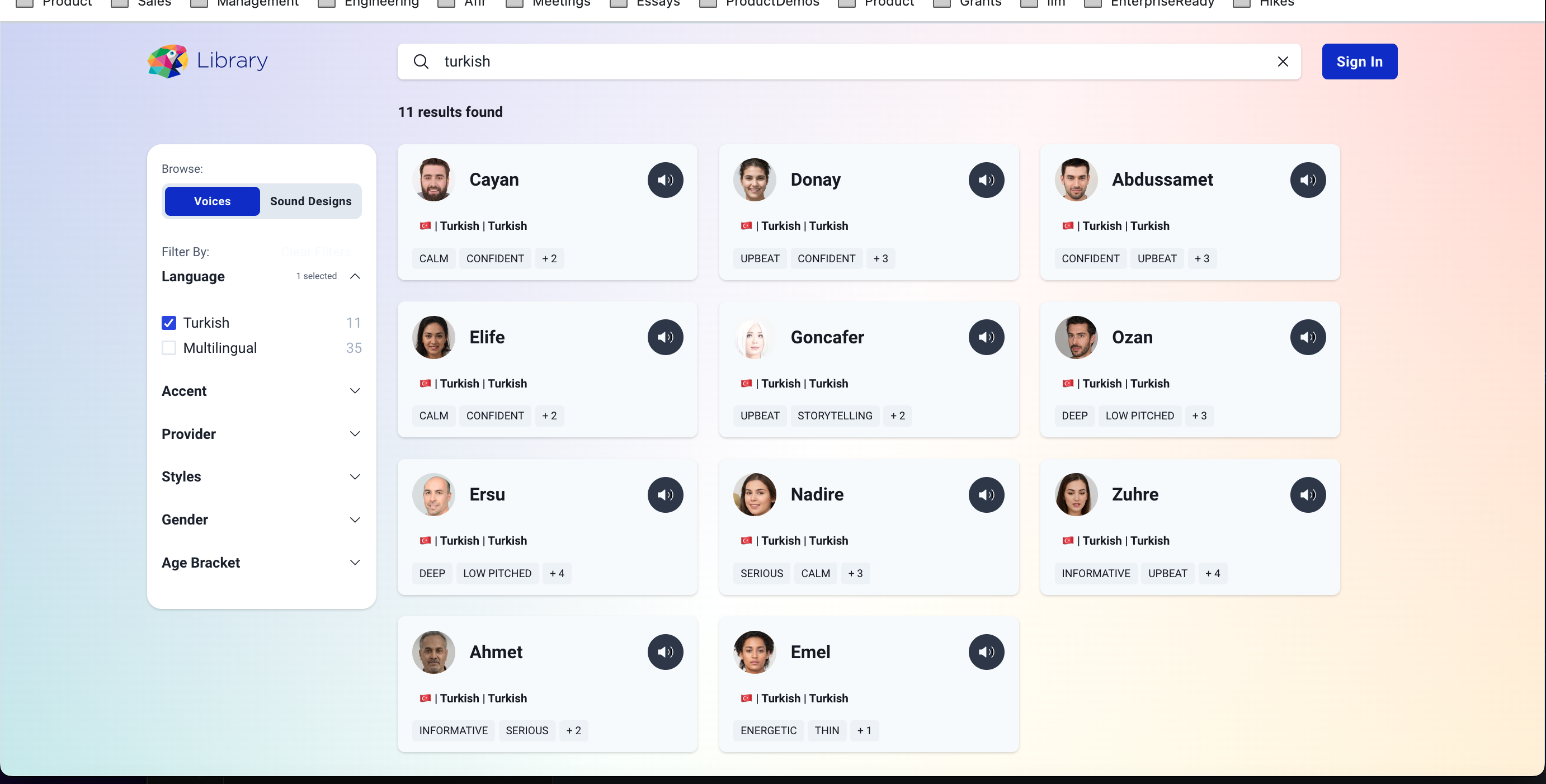

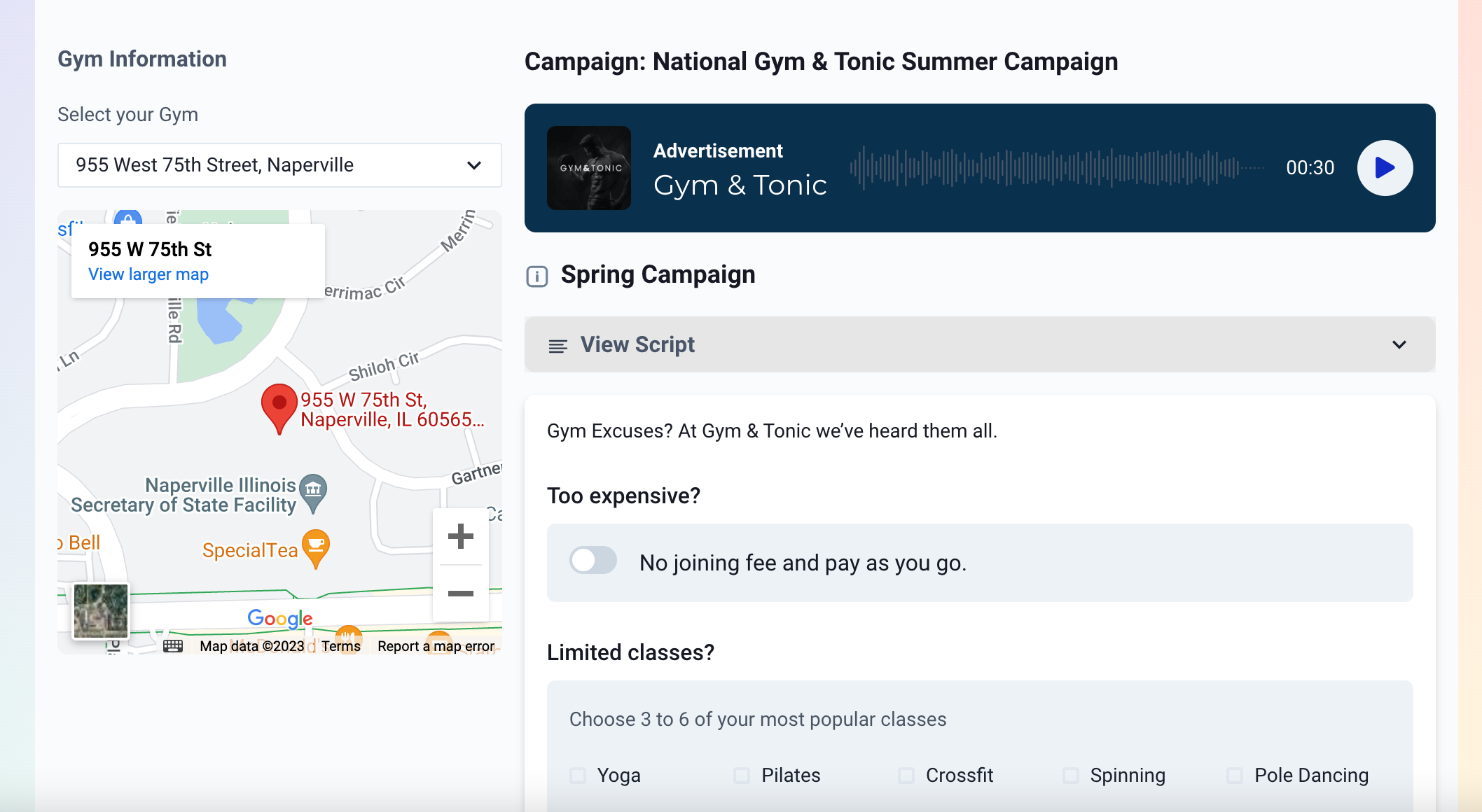

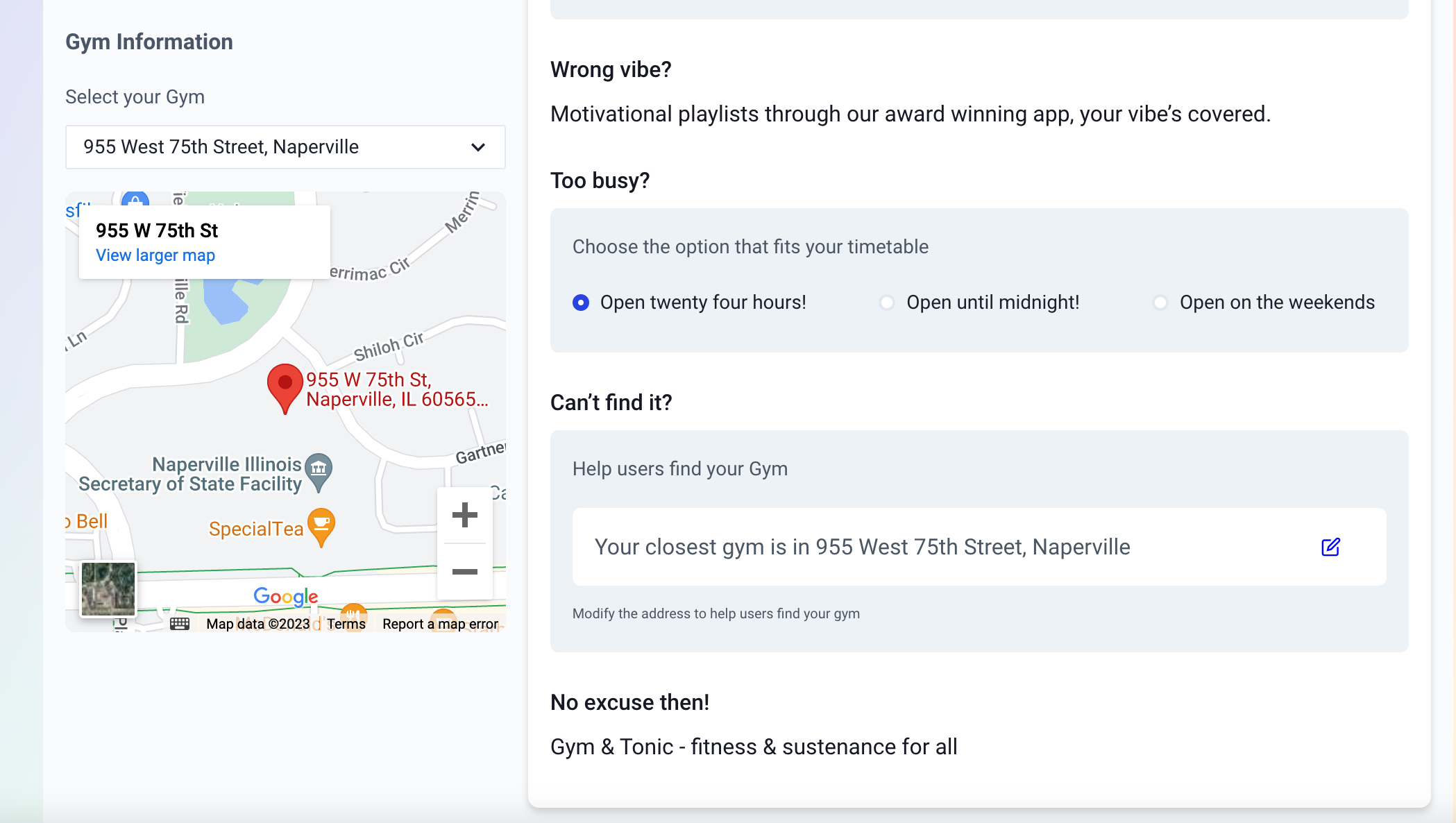

We're thrilled to announce the addition of cutting-edge AI voices to our repertoire, crafted using next-generation speech technology by PlayHT. These voices bring a new level of human-like emotion to text-to-speech generation, providing an immersive and expressive experience. They speak English in a range of accents: 🇺🇸 American, 🇬🇧 British, 🇦🇺 Australian and 🇨🇦 Canadian. Explore their expressive range; each voice is uniquely crafted to elevate your content across formats. From advertisements to podcasts to training videos, our new voices offer versatility and authenticity. Try them all out in our Library, alongside another 1200 voices!

❤️ We're committed to bringing you the latest advancements in AI voice technology, and we have more state-of-the-art AI voices coming next week ❤️

Deprecation Notice 😢

Later this week, three of our voices will be deprecated 😔: Renata, Jollie and Matthew. If you rely on any of these voices for your audio creation, please migrate to a different voice before the end of next week. You can find all available voices in our Library. Worry not: we'll be announcing more voices very soon, so watch this space 💯